Despite the recent hype, cybersecurity specialists have strongly criticized the bot, calling it a “malware-writing” tool and the next big threat to security. That’s partly because powerful AI systems such as ChatGPT generate content on demand from data collected during their training period.

The flip side is that such large language models typically lack up-to-date knowledge and error-correction abilities. So while ChatGPT can seem close to supernatural, it is already known for its limitations. In this article, we take a closer look at this advanced technology and explain how it affects the fraud and cybersecurity landscape.

What is ChatGPT?

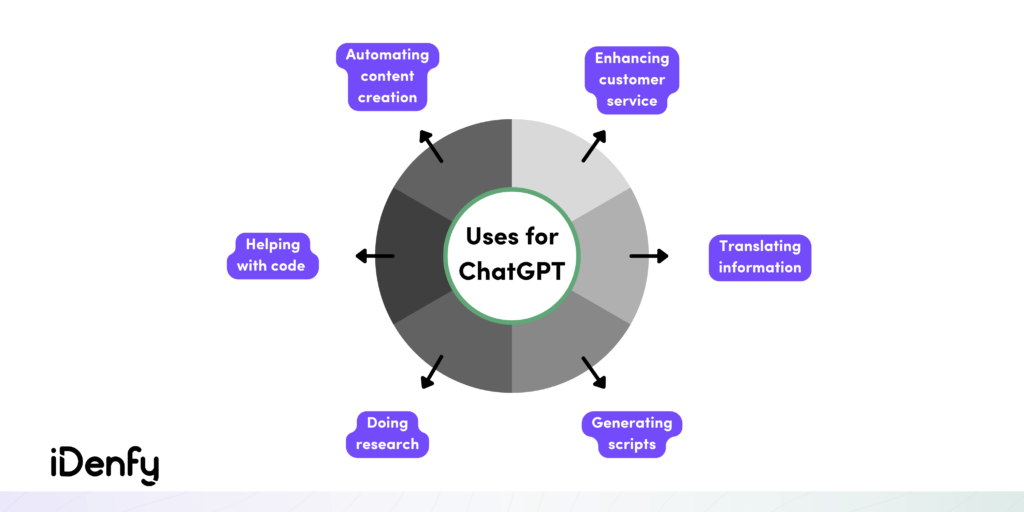

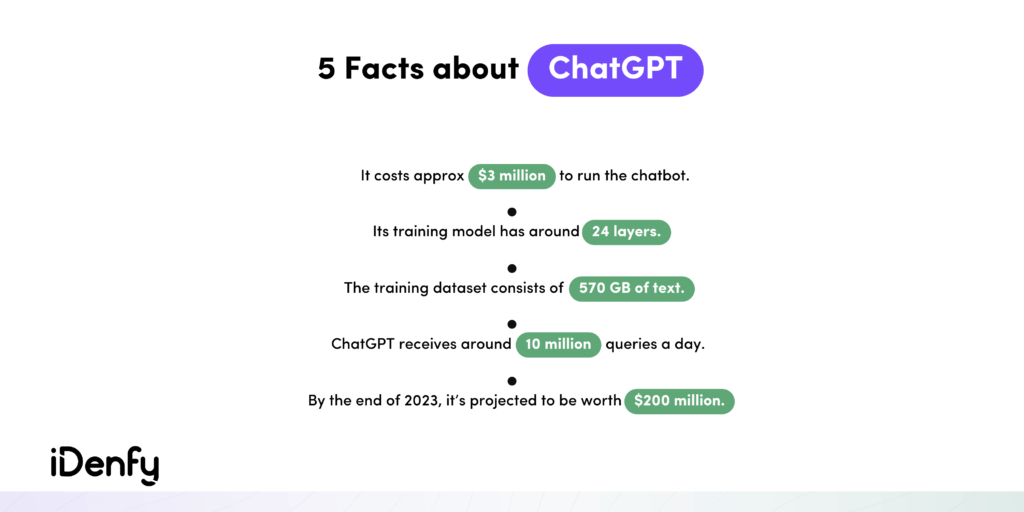

ChatGPT stands for Chat Generative Pre-trained Transformer. OpenAI, an AI research company, launched the now multimillion-dollar chatbot in November 2022. Its complex machine-learning model performs a range of tasks, including responding to users in a human-like manner, performing calculations, and even generating code.

How ChatGPT Actually Works

ChatGPT was trained using supervised fine-tuning and Reinforcement Learning from Human Feedback (RLHF). It’s now safe to say that the creators of this language model managed to transform AI technology into a successful engine that can learn, process large amounts of data, and perform tasks.

Using ChatGPT is pretty simple. All you need to do is create an account and start typing your text to receive the chatbot’s answers.

As with many AI tools, the Internet has hopped on the trend of trying to misuse the chatbot, prompting it to make up facts. But ChatGPT explicitly claims that it is not designed to generate false or fictitious information. OpenAI’s CEO and prominent Silicon Valley investor, Sam Altman, has recently assured users that the system will “get better over time”.

Why are People Worried About ChatGPT?

Many are concerned about AI inventions replacing humans. Because it can instantly produce full-length articles, many people agree that this AI bot has better writing skills than the average human being.

But even after securing a long-term $10 billion investment from Microsoft, ChatGPT continues to receive criticism in the education sector for sparking a wave of exam cheating and doing students’ homework. For this reason, some schools decided to block access to the AI bot.

A Tool for Recognizing ChatGPT

Apparently, it’s now possible to spot AI-written text. ChatGPT’s creators responded to the public by recently announcing a new Text Classifier for flagging AI-written content. Unsurprisingly, the new tool comes with a red disclaimer reminding users that its results can be unreliable.

OpenAI’s new tool flags “likely AI-written” text, so the results aren’t 100% accurate. This means that the Text Classifier needs some improvement and shouldn’t be the sole piece of evidence when making the final decision on whether a text was written by AI or a human being.
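To make the "not the sole piece of evidence" point concrete, here is a minimal Python sketch. It does not call OpenAI's Text Classifier; the score, the threshold, and every name in it are hypothetical and exist only for illustration. It shows one way a reviewer could treat a classifier flag as a prompt for further checks rather than as a verdict.

```python
from dataclasses import dataclass

# Hypothetical cutoff: scores at or above it count as "likely AI-written".
LIKELY_AI_THRESHOLD = 0.9


@dataclass
class Evidence:
    classifier_score: float  # hypothetical probability (0..1) that the text is AI-written
    other_signals: int       # number of independent indicators, e.g. from a manual review


def review_decision(evidence: Evidence) -> str:
    """Suggest a next step; a classifier flag alone never produces a final verdict."""
    if not 0.0 <= evidence.classifier_score <= 1.0:
        raise ValueError("classifier_score must be between 0 and 1")
    flagged = evidence.classifier_score >= LIKELY_AI_THRESHOLD
    if flagged and evidence.other_signals > 0:
        return "escalate to human review with supporting evidence"
    if flagged:
        return "inconclusive: classifier flag only, gather more evidence"
    return "no action"
```

Under these assumptions, a text with a high score but no other indicators stays "inconclusive", which mirrors the disclaimer attached to the tool.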

Future ChatGPT Plans and Recent Launches

After rumors surfaced about a paid version of ChatGPT, the company announced a new paid subscription plan on the 1st of February. According to company officials, ChatGPT Plus will offer a number of benefits for its users, all for $20/month:

- Faster responses

- Better access during peak times

- Access to new features and improvements

The public was also speculating about whether ChatGPT would remain available for free. But when presenting the paid version of the chatbot, OpenAI confirmed that it would continue to “support free access to as many people as possible”.

Is ChatGPT Safe?

Security challenges led Google to keep its own AI bots away from the public. Other tech giants and entrepreneurs, such as Elon Musk, a co-founder of OpenAI, have also stepped back or spoken about this technology rather negatively.

Opinions are mixed. Even though the language model can’t perpetrate fraud on its own, it’s important to remember that it can provide false information.

A lot could go wrong, especially when using the chatbot for the wrong reasons. Forget asking AI for legal and medical advice. Perhaps this is common sense, much like minors not being allowed to use ChatGPT without supervision. So, as much as ChatGPT’s creators want it to be safe, it’s still a work in progress.

“We’re using the Moderation API to warn or block certain types of unsafe content, but we expect it to have some false negatives and positives for now,” said OpenAI’s officials when describing the limitations of ChatGPT on their website.

“While we’ve made efforts to make the model refuse inappropriate requests, it will sometimes respond to harmful instructions or exhibit biased behavior.”
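The "warn or block" behavior and its false negatives and positives can be illustrated with a small Python sketch. It makes no network calls and is not OpenAI's implementation. The input is a dictionary of per-category scores, loosely modeled on the scores the Moderation API returns; the thresholds and the sample values are hypothetical.

```python
from typing import Dict

# Hypothetical thresholds, chosen only for illustration.
WARN_AT = 0.4
BLOCK_AT = 0.8


def moderation_action(category_scores: Dict[str, float],
                      warn_at: float = WARN_AT,
                      block_at: float = BLOCK_AT) -> str:
    """Map per-category scores (0..1) to 'allow', 'warn', or 'block'."""
    if not category_scores:
        return "allow"
    top_score = max(category_scores.values())
    if top_score >= block_at:
        return "block"
    if top_score >= warn_at:
        return "warn"
    return "allow"


# Hypothetical scores, not real API output.
borderline = {"hate": 0.02, "violence": 0.45}
print(moderation_action(borderline))               # warn
print(moderation_action(borderline, warn_at=0.5))  # allow: a higher cutoff lets it through
```

The same borderline input is warned about under one cutoff and allowed under another. Any fixed threshold trades false positives against false negatives, which is the limitation the quote describes.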

Possible ChatGPT Security Issues

Besides the obvious risks that the chatbot poses to users directly, it could be used to create fake news on a large scale. And this isn’t a new thing.

During the pandemic, bots were a large-scale tool for spreading false information about vaccines. More recently, in Russia’s war against Ukraine, information attacks have become the number one weapon for spreading propaganda.

Consequently, spreading misinformation can sway public opinion, for example, by eroding trust in organizations.

Additionally, ChatGPT can potentially serve as a tool for bad actors to commit fraud and engage in other dangerous schemes:

1. Malware

The reality is that tools like ChatGPT lower the investment needed for malware development, making it easy to create entry-level malware. A recent report on ChatGPT from the cybersecurity company Recorded Future found over 1,500 references explaining malware development in different forums on the dark web.

The forums were full of information on developing malware with ChatGPT, along with proof-of-concept code, targeted mainly at spammers, hacktivists, and credit card fraudsters. Experts also claimed that such data availability opens new doors for anyone, including those with limited programming abilities and only basic knowledge of computer science.

2. Phishing Emails

Another cybersecurity business, Check Point, showed how ChatGPT, combined with OpenAI’s code-writing system Codex, can be used to create phishing emails. After multiple iterations of asking the chatbot for malicious VBA code, ChatGPT produced it successfully, illustrating that AI can do all the work for a bad actor, provided they know how to put the puzzle pieces together.

Now that ChatGPT has been with us for quite some time, the AI tool has sharpened its skills, rejecting straightforward requests to “create a phishing email” by showing a notice or banning users for suspicious activity. Despite that, ChatGPT remains very helpful to those who struggle to write native-sounding messages, as it can produce legitimate-looking phishing emails that trick users into giving out personal information.

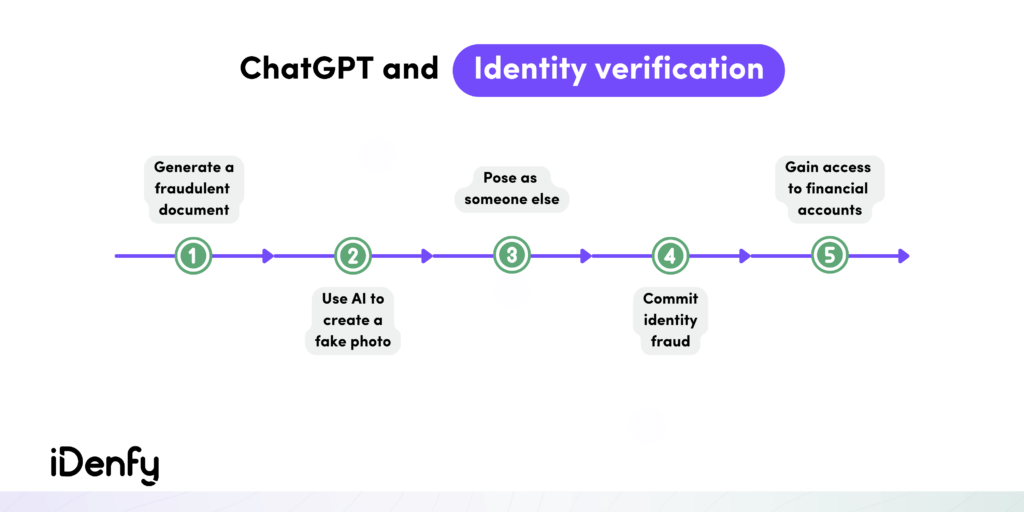

3. Fake Identity Documents

By now, we’ve established that the chatbot acts as a universal interface, letting users request almost any information, including the details needed to generate fake passports or ID cards. Back in the day, people had to photoshop and print their fraudulent documents; now, with the help of AI, it’s easier to make something look authentic even in the digital sphere.

Even though AI technologies are used by both the good guys and the bad actors, artificial intelligence is currently the best weapon for detecting document fabrications. So even if a scammer generates a fake ID document with the help of various AI-powered tools, including ChatGPT, AI fraud detection software is best placed to instantly spot inauthentic documents and prevent bad actors from committing large-scale crimes online.

Deepfake Technology and ChatGPT

Beyond chatbots, there are AI-generated voices, face-swapping apps, and real-looking deepfake videos. With this level of technology, it appears that seeing is no longer believing. Experts argue that, paired with ChatGPT, deepfakes can potentially deceive millions of people, giving fraudsters an easy way to breach even the highest security standards.

Deepfakes have been turning heads since 2017, when AI-generated photos began to look almost indistinguishable from real pictures. Today, they can be generated on various websites in seconds.

In the California Law Review, researchers described deepfakes as a harmful tool, explaining the technology’s risks to society, including such threats as damaging reputations, undermining journalism, or even harming public safety and national security.

So it’s no secret that these convincing AI-generated hoaxes have raised concerns over the past years. Let’s take a more detailed look at a few examples:

Easy data manipulations: You’ve probably heard about the Tom Cruise deepfakes on TikTok or at least might have seen Obama’s deepfake video on YouTube.

Using deepfake recordings and videos makes it hard for people to distinguish what’s real and what’s not. Now that ChatGPT can write scripts and generate dialogue in seconds, it’s become easier to create artificial stories and manipulate the media.

Social media alterations: This is another look into the bigger issue with deepfakes and any generative AI tools used for criminal purposes. Similar to any other news site, a social media platform can spread misinformation.

With ChatGPT and deepfake technology in the wrong hands, people can very quickly come to trust the wrong source, or in this case, a fake social media account, which helps fake news spread and, once again, damages public discourse.

Stopping Cybercriminals from Exploiting ChatGPT

Even though it would be wrong to say that a bad actor can carry out an attack with ChatGPT or deepfakes alone, current AI abilities strongly complement the work of humans.

While it’s impossible to prevent criminals from trying to find ways to use the chatbot for malicious purposes, it is in every business’s best interest to stop hackers from using bots, fake documents, inauthentic social media profiles, and other scams that can harm its reputation and its users.

Yes, ChatGPT can make a hacker’s work more efficient. So if they can exploit ChatGPT for fraud, you can also use AI and fight back.

At iDenfy, we use AI to tackle complex fraud issues, helping businesses stop fraud at any stage in real time. Our fully automated identity verification software uses facial biometrics and certified liveness technology to spot deepfakes, 3D masks, and other alterations during the customer onboarding process.

Try out our all-in-one KYC, KYB, and AML compliance platform today.