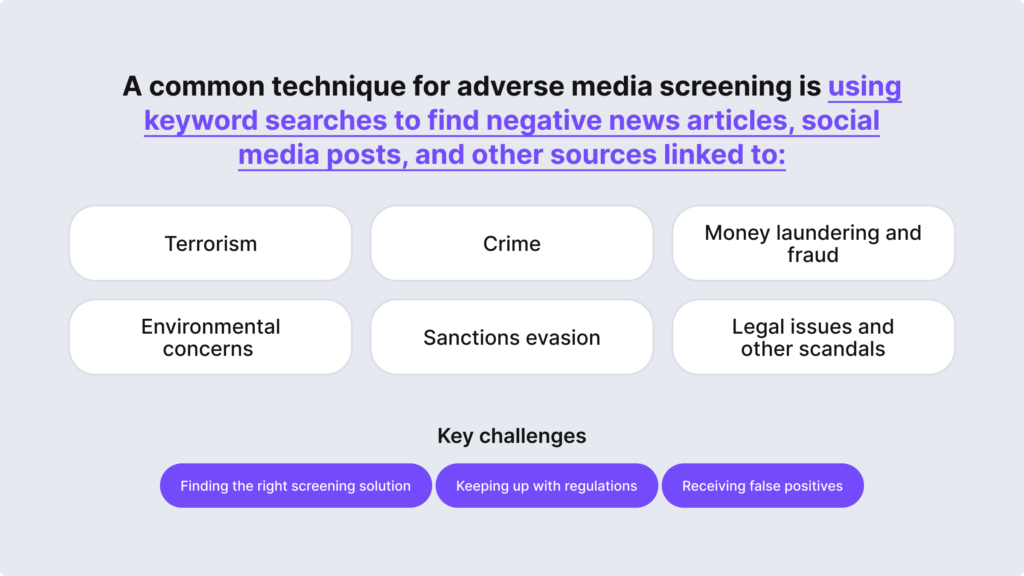

Adverse media, also known as negative news, is relevant public information collected from various news sources and used to identify individuals or companies associated with money laundering, bribery, sanctions evasion, drug trafficking, organized crime, tax evasion, financial fraud, and similar offenses. While screening newspapers and analyzing thousands of articles might seem tedious, adverse media screening has been around for a long time, serving as a vital anti-money laundering (AML) compliance measure and an effective risk management tool for many regulated entities.

Regulatory bodies, such as the Financial Action Task Force (FATF) or the Financial Crimes Enforcement Network (FinCEN), treat adverse media screening as an expected part of the AML process for customers and third parties, especially for enhanced due diligence (EDD) on high-risk entities. Screening every customer against a constant stream of news produces large amounts of data, which is hard for financial institutions to manage without automated tools. So, like with many compliance-related tasks, the main challenge is to build an efficient yet accurate and compliant risk management system, which includes reviewing multiple adverse media sources.

In this blog post, we’ll review the key adverse media strategies, the keyword search approach, how technology affects screening, and what challenges companies need to overcome when it comes to AML compliance.

What are Some Examples of Adverse Media?

Some risk factors are clear-cut, but in general, each institution decides what qualifies as adverse media and whether it makes a customer high-risk. For example, a person on a sanctions list is always considered high-risk.

Common examples of risk factors that most companies include in their adverse media practices are:

- Political exposure. This includes Politically Exposed Persons (PEPs), who are high-ranking government officials, their family members, and close associates. Due to this sort of exposure and status, these individuals are more likely to be linked to corruption and other financial crimes.

- Sanctions. Sanctions change, and sometimes high-profile sanctions make the news before they are added to official sanctions lists. In such cases, adverse media screening helps you keep up with recent updates.

- Geographical risk. Some countries or regions are considered high-risk due to changing political conditions, economic instability, or high levels of crime such as money laundering or corruption. For example, the FATF identifies places like Iran and Myanmar as high-risk jurisdictions. As a result, clients from these areas should be carefully monitored.

- Crime. This is the essence of adverse media since it’s important to find customers and other companies linked to fraud, terrorism, money laundering, financial crime, and other similar offenses. The media often covers major cases like this.

Some companies choose to accept the risks that come with certain business relationships, such as those with high-risk politicians. In this case, high-risk clients require enhanced due diligence (EDD) measures, including adverse media screening and ongoing monitoring, to prevent money laundering and other illicit activity.
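To make this concrete, here is a minimal Python sketch of how an institution might turn the risk factors above into a rating that decides whether EDD applies. The `Customer` fields, the country codes, the weights, and the thresholds are all hypothetical policy choices for illustration. They are not values set by the FATF or any other regulator.

```python
from dataclasses import dataclass

# Hypothetical policy values for illustration only.
HIGH_RISK_COUNTRIES = {"IR", "MM", "KP"}  # ISO 3166-1 alpha-2 codes
WEIGHTS = {"pep": 3, "high_risk_country": 1, "crime_mention": 3}


@dataclass
class Customer:
    name: str
    country: str
    is_pep: bool = False
    sanctions_hit: bool = False
    confirmed_crime_mentions: int = 0  # adverse media hits already verified


def risk_rating(c: Customer) -> str:
    """Return 'low', 'medium', or 'high' from the customer's risk factors."""
    if c.sanctions_hit:
        return "high"  # a sanctions match always counts as high risk
    score = 0
    if c.is_pep:
        score += WEIGHTS["pep"]
    if c.country in HIGH_RISK_COUNTRIES:
        score += WEIGHTS["high_risk_country"]
    if c.confirmed_crime_mentions > 0:
        score += WEIGHTS["crime_mention"]
    if score >= 3:
        return "high"
    return "medium" if score > 0 else "low"


def requires_edd(c: Customer) -> bool:
    """High-risk customers get EDD, including adverse media screening."""
    return risk_rating(c) == "high"


if __name__ == "__main__":
    print(risk_rating(Customer("Jane Roe", "MM")))                # medium
    print(requires_edd(Customer("John Doe", "US", is_pep=True)))  # True
```

With these example weights, a customer from a listed country alone lands in "medium" (closer monitoring). A PEP, a confirmed crime mention, or a sanctions match pushes the customer into "high" and triggers EDD.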


What Regulations Mandate Adverse Media Screening?

Back in 2018, compliance with FinCEN’s Customer Due Diligence (CDD) Final Rule became mandatory. Among other things, the rule requires covered financial institutions not only to report suspicious activity by their clients but also to conduct ongoing, risk-based monitoring of customer relationships, and monitoring the media for new negative news is a common way to do that.

In practice, this means companies should implement AML measures and conduct ongoing CDD, as well as verify each customer during the onboarding process and throughout their entire business relationship.

Adverse media screening should be treated as a risk assessment measure that helps companies decide whether a risk is significant enough to end their relationship with a particular customer. This is very important for fraud prevention in general and for sanctions compliance, which also requires screening against global sanctions lists and complying with different sanctions regimes.

The FATF has also created a list of recommendations to help businesses identify high-risk entities and individuals, recommending adverse media checks and stressing other important rules, such as the need to monitor cryptocurrencies and other virtual assets.

Why is AI Used in Adverse Media Screening?

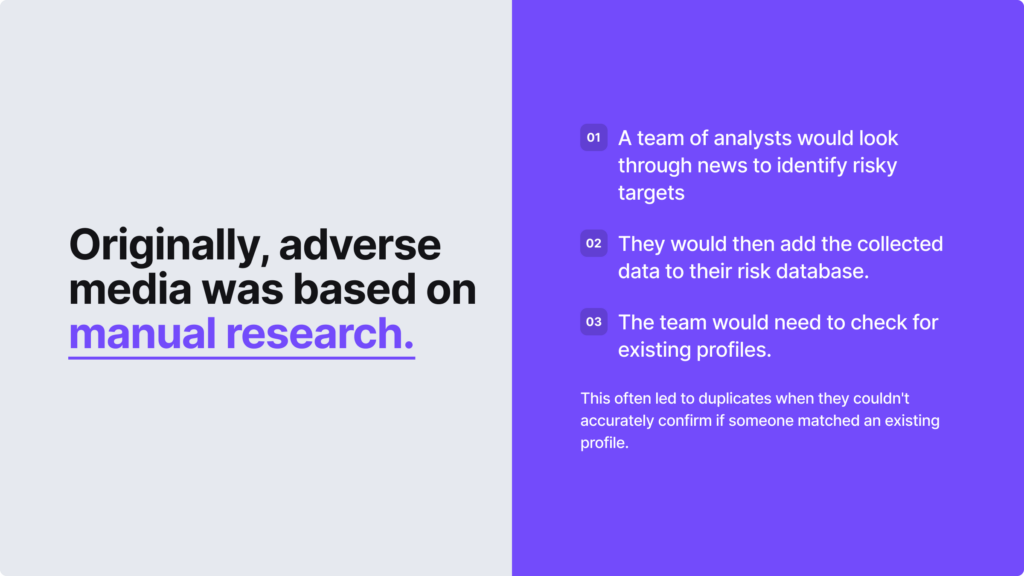

Previously, adverse media checks were conducted manually, which meant companies needed a highly skilled analyst team. Checking news outlets was time-consuming and difficult because of the sheer number of publications that needed to be reviewed.

With such a manual review process, false positives were an issue because many customers share common names. Sometimes, the adverse media information was hard to access because of a paywall, or it was not available in certain languages, especially in restricted regions. Without any sort of automated tool, businesses needed not only to find adverse media information but also to maintain detailed records to assess whether each match posed an actual risk.

In this sense, AI-powered adverse media software makes the screening process easier to handle and allows businesses to address financial crime risks in real time. According to the regulatory bodies mentioned above, such as the FATF, this process is vital for maintaining a risk-based approach to AML compliance. So, by conducting thorough adverse media keyword research, companies can better identify and assess money laundering risks.

What Do Keywords in Adverse Media Mean?

Scanning articles manually became more and more inefficient, and adding potential matches to endless databases became a hassle for human analysts. That is when search engines came into the picture. They made it possible to search adverse media by keyword and to scale the process while closing major gaps, such as running keyword research in different languages. This process is still used today, and some third-party AML service providers build their adverse media databases this way.
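As a rough illustration, a keyword search usually pairs the customer’s name, in quotes, with a set of adverse keywords joined by OR. The `build_query` helper below is a hypothetical sketch. Quoting and `OR` are widely supported search operators, but exact syntax and query limits vary by search engine.

```python
def quote(term: str) -> str:
    """Wrap multi-word terms in quotes so they are searched as exact phrases."""
    return f'"{term}"' if " " in term else term


def build_query(name: str, keywords: list[str]) -> str:
    """Pair the customer's exact name with adverse keywords joined by OR."""
    joined = " OR ".join(quote(k) for k in keywords)
    return f'"{name}" ({joined})'


if __name__ == "__main__":
    print(build_query("John Doe", ["fraud", "money laundering", "bribery"]))
    # "John Doe" (fraud OR "money laundering" OR bribery)
```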

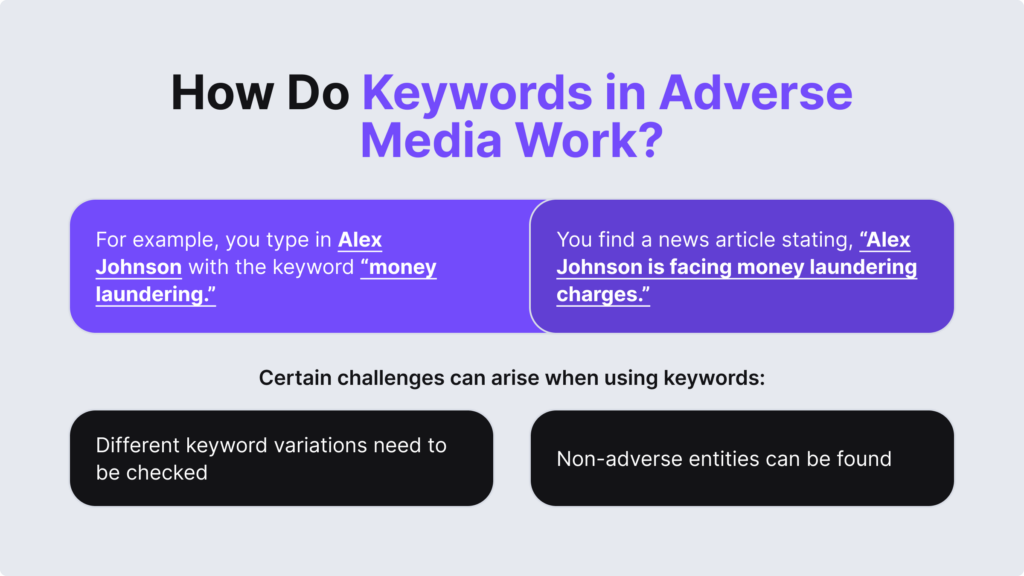

The most common question about adverse media keywords is which keywords to include in a search. Additionally, simple keywords like "fraud" or "money laundering" can point to a genuine high-risk situation, but they can also return completely irrelevant information.

For example, if you search for your customer, John Doe, and type in the keyword “money laundering” next to their name, you might find an article with a headline of “John Doe was found guilty of money laundering.” So, in this sense, the keyword search worked, and you identified an AML risk. Despite that, it’s not clear if you found the right John Doe.
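The check in this example can be sketched in a few lines of Python. This is a hypothetical, simplified screen that flags a headline only when the customer’s name and an adverse keyword appear together. Even a hit is marked for identity review, because co-occurrence alone can’t tell you whether you found the right John Doe.

```python
import re


def screen_headline(headline: str, customer_name: str, keywords: list[str]):
    """Return a hit dict if the name and any adverse keyword co-occur, else None."""
    text = headline.casefold()
    if customer_name.casefold() not in text:
        return None
    # \b word boundaries make each keyword match as a whole word only.
    hits = [
        kw for kw in keywords
        if re.search(rf"\b{re.escape(kw.casefold())}\b", text)
    ]
    if not hits:
        return None
    # Co-occurrence says nothing about identity, so every hit needs review.
    return {"name": customer_name, "keywords": hits, "status": "needs identity review"}


if __name__ == "__main__":
    print(screen_headline("John Doe was found guilty of money laundering.",
                          "John Doe", ["fraud", "money laundering"]))
```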

Below, we look into the key problems around keywords in adverse media in more detail:

1. There are Similar Keywords and Synonym Variations

It’s not enough to search for only one keyword, because related words point to the same risk you want to identify. For example, "scam," "scammer," and "scammed" are linked the same way as "fraud" and "fraudulently." So, if a headline reads "John Doe scammed a financial institution, according to district attorneys," you should look into it more deeply. To catch such cases, add keywords that cover different word forms, as well as synonyms like "swindle" or "deceit."
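One simple way to cover word forms is to search by stem and let a regular expression match the endings. The stem list below is a hypothetical example for the "fraud" risk category. Prefix matching like this is crude (for instance, "scam" would also match "scamper"), so treat it as a starting point rather than a finished keyword list.

```python
import re

# Hypothetical stems for the "fraud" risk category. The trailing \w* lets each
# stem match its inflections, e.g. scam -> scams, scammer, scammed.
FRAUD_STEMS = ["fraud", "scam", "swindl", "deceit", "deceiv"]
FRAUD_PATTERN = re.compile(
    r"\b(?:" + "|".join(map(re.escape, FRAUD_STEMS)) + r")\w*",
    re.IGNORECASE,
)


def fraud_terms(text: str) -> list[str]:
    """Return every word in the text that starts with one of the fraud stems."""
    return [m.group(0) for m in FRAUD_PATTERN.finditer(text)]


if __name__ == "__main__":
    headline = "John Doe scammed a financial institution, according to district attorneys."
    print(fraud_terms(headline))  # ['scammed']
```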


2. You Can Find Unrelated Entities at the Same Time

There can be people with the same name, or adverse media mentions can turn out to be false positives. For example, suppose you screen your customer Daisy Jones and the keyword search returns the headline "John Doe was found guilty of money laundering, stated public prosecutor Daisy Jones." The search technically succeeded, but the hit is irrelevant: your customer is not the offender but the prosecutor. In other cases, the matched person might be a legal consultant, a journalist, a victim, and so on.

This shows why it’s important to see the whole context when using keywords in adverse media. Sometimes, keyword searches are limited to certain phrases or exact words that the algorithm is set to detect, so relevant articles can be missed. As a result, compliance teams should also search for related terms and different variations. The same goes for finding the right person: if the customer is "Sandy Holmes," you should also search for variations like "S. Holmes."
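Both ideas in this section, name variations and context, can be sketched with a simple heuristic. The code generates common variants of the customer’s name and checks whether a matched name is directly preceded by a role word such as "prosecutor" or "journalist." The role list, the three-word window, and the function names are hypothetical. Real articles need far more robust entity and role extraction.

```python
import re

# Hypothetical role words suggesting the person is not the subject of the story.
NON_SUBJECT_ROLES = {"prosecutor", "attorney", "lawyer", "consultant",
                     "journalist", "reporter", "victim", "judge"}


def name_variants(full_name: str) -> set[str]:
    """Common variants: full name, initial plus surname, 'Surname, First'."""
    parts = full_name.split()
    first, last = parts[0], parts[-1]
    return {full_name, f"{first[0]}. {last}", f"{last}, {first}"}


def appears_in_non_subject_role(text: str, full_name: str, window: int = 3) -> bool:
    """True if any variant of the name is directly preceded by a role word."""
    for variant in name_variants(full_name):
        for match in re.finditer(re.escape(variant), text, re.IGNORECASE):
            # Look only at the few words right before the matched name.
            preceding = re.findall(r"[a-z]+", text[:match.start()].lower())[-window:]
            if NON_SUBJECT_ROLES.intersection(preceding):
                return True
    return False


if __name__ == "__main__":
    headline = ("John Doe was found guilty of money laundering, "
                "stated public prosecutor Daisy Jones.")
    print(sorted(name_variants("Sandy Holmes")))  # ['Holmes, Sandy', 'S. Holmes', 'Sandy Holmes']
    print(appears_in_non_subject_role(headline, "Daisy Jones"))  # True
    print(appears_in_non_subject_role(headline, "John Doe"))     # False
```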

3. Some Search Keywords Have a Different Meaning

Since adverse media screening covers many different sources and genres, keyword searches can return misleading results. That’s because the same word or collocation can have different meanings, especially when idioms or figurative language are read out of context.

For example: "Emily Davis crushed it on Saturday. She shot the ball out of the park twice, leaving her victim, pitcher Sarah Lee, feeling totally defeated." Words like "launder," "charged," or "dumped" cause similar problems. This highlights the need for human intervention at some point, since compliance analysts can’t rely on automation alone. However, when combined with proper screening software and a skilled analyst team, the adverse media keyword search method can be accurate.
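Ambiguous words can’t be resolved by keywords alone, so a common pattern is to use context only to set review priority and still send the hit to a human. The sketch below assumes hypothetical word lists for ambiguous terms and sports context. It does not decide whether an article is adverse. It only decides how urgently an analyst should look at it.

```python
import re

# Hypothetical word lists for illustration.
AMBIGUOUS_TERMS = {"shot", "victim", "crushed", "charged", "dumped", "launder"}
SPORTS_CONTEXT = {"pitcher", "ball", "inning", "match", "season", "coach", "game"}


def review_priority(text: str):
    """Return None (no ambiguous term), 'low', or 'high' review priority."""
    words = set(re.findall(r"[a-z]+", text.lower()))
    if not words & AMBIGUOUS_TERMS:
        return None
    # Sports vocabulary suggests figurative use, but a human still decides.
    if words & SPORTS_CONTEXT:
        return "low"
    return "high"


if __name__ == "__main__":
    story = ("Emily Davis crushed it on Saturday. She shot the ball out of the park "
             "twice, leaving her victim, pitcher Sarah Lee, feeling totally defeated.")
    print(review_priority(story))                                # low
    print(review_priority("Sarah Lee was charged with fraud."))  # high
```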

4. Language Barrier Becomes an Issue

Different languages and document formats have long been a headache for compliance teams, and keywords in adverse media are no exception. For example, in Spanish, "fraud" appears as the keyword "fraude," but there are alternatives like "estafa" that shouldn’t be missed.

On top of that, some local-language sources may not be covered by the databases or screening solutions you use, which creates further challenges. Other nuances, like slang that evolves over time or industry jargon, also make it difficult to keep adverse media keyword lists up to date.
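A minimal sketch of multilingual keyword templates might look like this. The template contents are hypothetical examples. Matching uses accent-insensitive substring search via Python’s `unicodedata`, so "corrupción" and "CORRUPCION" are treated the same. Plain substring matching is deliberately naive here: for instance, the English "fraud" would also match inside the Spanish "fraude."

```python
import unicodedata

# Hypothetical keyword templates; a real list would be far longer per language.
KEYWORD_TEMPLATES = {
    "en": ["fraud", "money laundering", "corruption"],
    "es": ["fraude", "estafa", "lavado de dinero", "corrupción"],
}


def fold(text: str) -> str:
    """Lowercase and strip accents so 'CORRUPCIÓN' and 'corrupcion' compare equal."""
    decomposed = unicodedata.normalize("NFKD", text.casefold())
    return "".join(ch for ch in decomposed if not unicodedata.combining(ch))


def match_keywords(text: str) -> list[tuple[str, str]]:
    """Return (language, keyword) pairs whose folded form occurs in the text."""
    folded = fold(text)
    return [
        (lang, kw)
        for lang, keywords in KEYWORD_TEMPLATES.items()
        for kw in keywords
        if fold(kw) in folded
    ]


if __name__ == "__main__":
    print(match_keywords("Acusado de CORRUPCION y estafa"))
    # [('es', 'estafa'), ('es', 'corrupción')]
```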

5. Irrelevant Noise in the Search Results Can Happen

Keywords in adverse media can generate a long list of flagged results because of the vast amount of data that needs to be checked. Filtering these results afterwards is time-consuming. That’s why adding too many irrelevant keywords isn’t a good option either: they cause delays that make it harder to focus on real risks.

For example, if you’re assessing John Doe and find multiple adverse media results, you should determine whether all of them belong to the same person. The main question, then, is not which adverse media keywords to use but how to minimize the "noise," or irrelevant information, later on.
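To check whether several results describe the same person, you can compare hits against identifiers you already hold for the customer. The sketch below assumes each hit has already been enriched with hypothetical `birth_year` and `country` fields; extracting those from articles is a separate problem. The code drops duplicate URLs, discards hits that contradict a known identifier, and leaves hits with nothing to compare for an analyst.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class MediaHit:
    url: str
    name: str
    birth_year: Optional[int] = None  # hypothetical field extracted from the article
    country: Optional[str] = None     # hypothetical field extracted from the article


def triage(hits, customer_birth_year: int, customer_country: str):
    """Split hits into likely-same-person, unresolved, and discarded lists."""
    likely_same, unresolved, discarded = [], [], []
    seen_urls = set()
    for hit in hits:
        if hit.url in seen_urls:
            continue  # the same article found twice is only noise
        seen_urls.add(hit.url)
        pairs = [(hit.birth_year, customer_birth_year), (hit.country, customer_country)]
        known = [(found, expected) for found, expected in pairs if found is not None]
        if any(found != expected for found, expected in known):
            discarded.append(hit)    # contradicts something we know
        elif known:
            likely_same.append(hit)  # at least one identifier agrees, none conflict
        else:
            unresolved.append(hit)   # nothing to compare, so an analyst decides
    return likely_same, unresolved, discarded
```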

How to Automate Keyword Research in Adverse Media?

While manually looking for keywords in adverse media can be effective, it isn’t the go-to approach if your business wants to scale. That’s because analysts must repeat the same steps for every customer: collect keywords, look for negative news, filter out risks, identify which hits are false positives, then gather this data and sort it into categories. This leaves room for error and biased decisions, which can be costly.

Building on tools like Google Translate, ChatGPT, and search engines in general, RegTech service providers today rely on AI and machine learning to offer automated adverse media screening solutions that make this job easier. This shift makes information easier to validate, since the software automatically flags adverse media results, categorizes them, and assigns risk scores, helping ensure that only relevant information reaches compliance teams.
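As a simplified stand-in for this flag, categorize, and score flow, the sketch below uses keyword rules rather than a machine learning model. The categories, keywords, severity weights, and the 0.5 threshold are hypothetical. The point is the shape of the pipeline: only results scoring at or above the threshold reach the compliance queue.

```python
# Hypothetical rules: category -> (severity weight, trigger keywords).
CATEGORY_RULES = {
    "terrorism": (1.0, ["terrorism", "terrorist"]),
    "money_laundering": (0.8, ["money laundering", "laundered"]),
    "fraud": (0.6, ["fraud", "scam"]),
}


def categorize(text: str) -> list[str]:
    """Return the sorted categories whose keywords appear in the text."""
    lowered = text.lower()
    return sorted(
        category
        for category, (_, keywords) in CATEGORY_RULES.items()
        if any(kw in lowered for kw in keywords)
    )


def score(categories: list[str]) -> float:
    """Risk score = weight of the most severe category (0.0 if none)."""
    return max((CATEGORY_RULES[c][0] for c in categories), default=0.0)


def route_to_analysts(articles: list[str], threshold: float = 0.5) -> list[dict]:
    """Flag, categorize, and score articles; keep those at or above the threshold."""
    flagged = []
    for article in articles:
        categories = categorize(article)
        risk = score(categories)
        if risk >= threshold:
            flagged.append({"text": article, "categories": categories, "score": risk})
    # Highest-risk items first so analysts see them before the rest.
    return sorted(flagged, key=lambda item: item["score"], reverse=True)
```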

So, if you want to remove the hassle around manual keyword searches, opting for an automated solution is the best way to do that.

iDenfy’s Adverse Media Screening Solution

iDenfy’s solution uses information collected from different search engines (Google, Bing, and Yahoo) to deliver accurate negative news results. The software screens over 15,000 sites in 195 countries, including sources like police sites, tax office sites, criminal investigation sites, most wanted lists, and local and global news sites.

Real-time updates are also available. Our adverse media solution supports keyword templates in over 12 languages and can detect typos, automatically translate text, and use fuzzy matching to find negative news linked to your entered keyword, even if it doesn’t match exactly. The screening can be customized to narrow the results, for example by focusing on a specific region, helping you minimize false positives and get more accurate keyword search results.
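To illustrate what fuzzy matching means in general, Python’s standard `difflib` module can score how similar two strings are. This is not iDenfy’s implementation. The 0.85 and 0.8 cutoffs below are hypothetical and would need tuning against real data.

```python
from difflib import SequenceMatcher, get_close_matches


def name_similarity(a: str, b: str) -> float:
    """Similarity ratio between 0.0 and 1.0, ignoring case."""
    return SequenceMatcher(None, a.casefold(), b.casefold()).ratio()


def is_fuzzy_name_match(a: str, b: str, threshold: float = 0.85) -> bool:
    """Treat two names as a possible match if they are similar enough."""
    return name_similarity(a, b) >= threshold


def correct_keyword(token: str, keywords: list[str], cutoff: float = 0.8):
    """Map a misspelled token to the closest known keyword, or None."""
    matches = get_close_matches(token.casefold(), keywords, n=1, cutoff=cutoff)
    return matches[0] if matches else None


if __name__ == "__main__":
    print(is_fuzzy_name_match("Jon Doe", "John Doe"))                        # True
    print(correct_keyword("laundring", ["laundering", "fraud", "bribery"]))  # laundering
```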

Let’s talk further or sign up to get started right away.